Overview

Getting started with large language models

See the book version here: AI Book

General Overview

Wharton’s Ethan Mollick writes the most comprehensive and up-to-date summaries of how to use LLMs for practical tasks.

His latest summary, How to Use AI to Do Stuff: An Opinionated Guide, is the best guide as of December 2023.

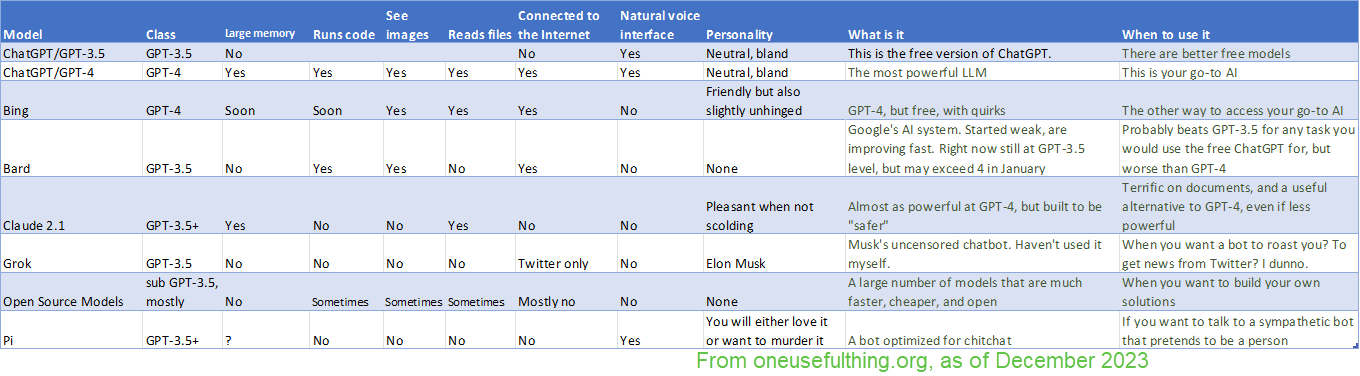

You can try all the popular LLM chatbots simultaneously with the GodMode smol AI Chat Browser.

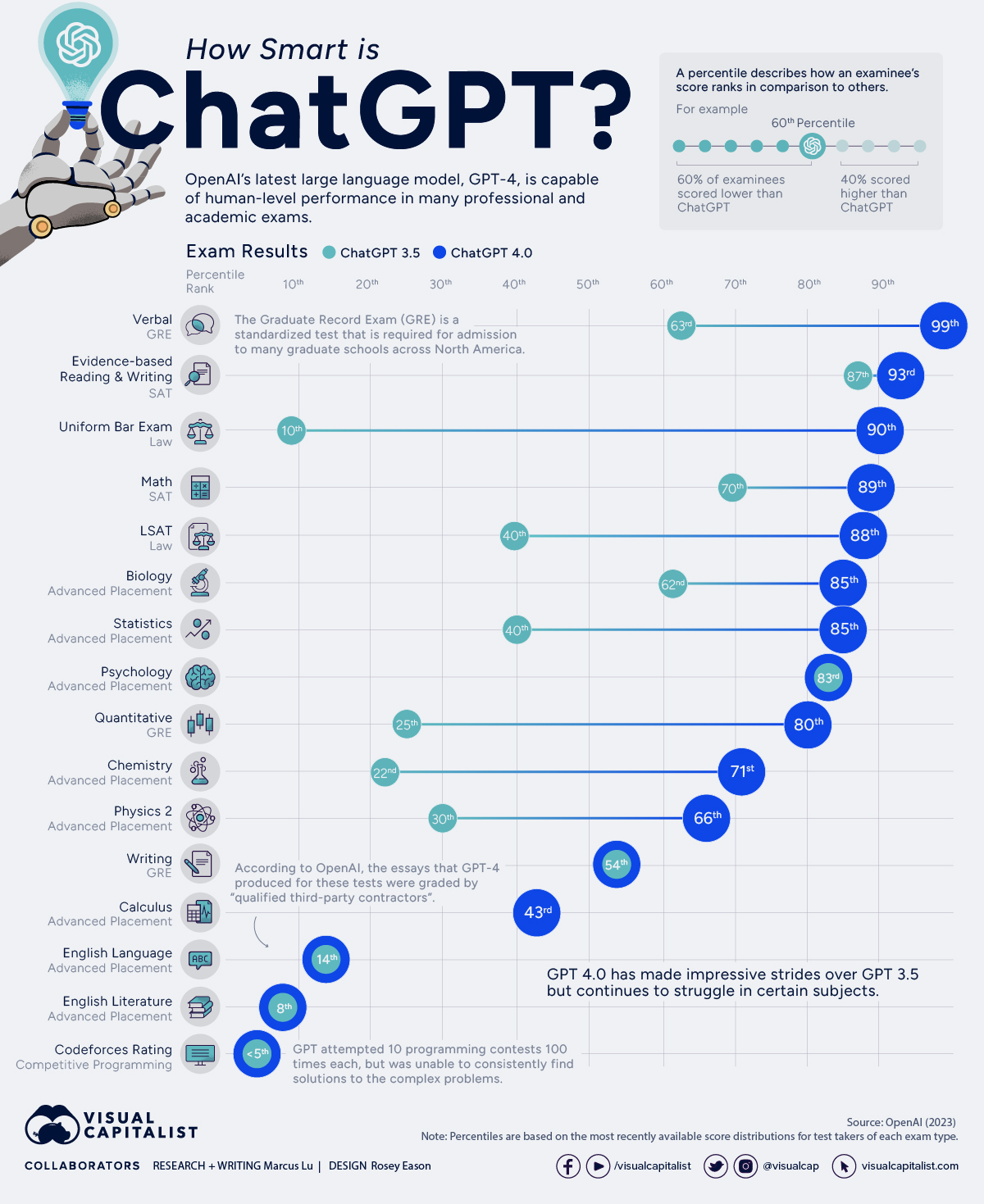

How Smart Is ChatGPT?

Visual Capitalist makes a nice graphic comparing ChatGPT’s scores on various standardized tests.

Understanding LLMs

Stephen Wolfram explains What is ChatGPT Doing and Why Does it Work? in a lengthy, step-by-step way. It took me a couple of hours to read (it’s very dense and technical), but the time was well spent.

The conclusion, that LLMs point the way to a better understanding of human language and thinking, is especially provocative. And don’t miss the Hacker News discussion: Wolfram Alpha and ChatGPT.

Jason Crawford asks “Can submarines swim?” in a good, easy-to-understand explanation of GPT technology.

Conclusion: recognize that it’s a tool with advantages and disadvantages compared with “thinking,” in the same way that what a submarine does differs from swimming.

AI Safety

Why You May Not Want to Correct Bias in an AI Model

Stanford researchers found that only about half of the sentences generated by AI-powered search engines in response to a query were fully supported by the citations they provided.[1]

And see many more arguments against claims that AI is the biggest thing ever:

Freddie deBoer’s Does AI Just Suck? shows examples of how AI-generated art looks pretty awful when set side by side with the human kind.

If I asked an aspiring filmmaker who their biggest influences were and they answered “every filmmaker who has ever lived,” I wouldn’t assume they were a budding auteur. I would assume that their work was lifeless and drab and unworthy of my time.

Also see How do we know how smart AI systems are? by Santa Fe Institute researcher Melanie Mitchell (@MelMitchell1), who gives several examples of ChatGPT appearing to do well on a task, only to fail when the wording is changed slightly.

In Can Large Language Models Reason? she explains in detail many of the issues raised by asking whether LLMs do more than pattern-matching. Some of the references she cites seem to offer good evidence that, no, these models are not reasoning but are simply good at finding similar examples in their extensive training data.

What AI Can’t Do

Political orientation

AI Performance

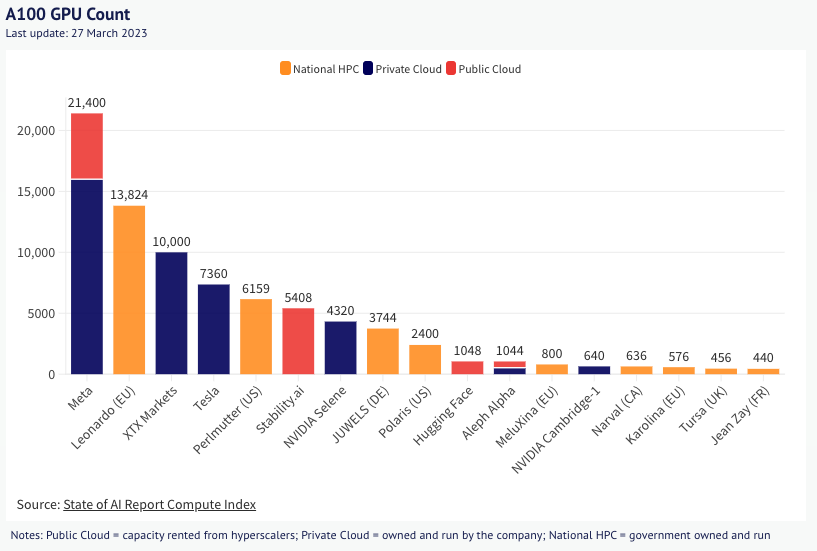

The State of AI Report Compute Index tracks the size of public, private and national HPC clusters, as well as how often various AI chips are used in AI research papers. Because compute is the key substrate on which AI models are trained and run, the size of compute clusters and the popularity of specific chips help us take the temperature of AI progress.

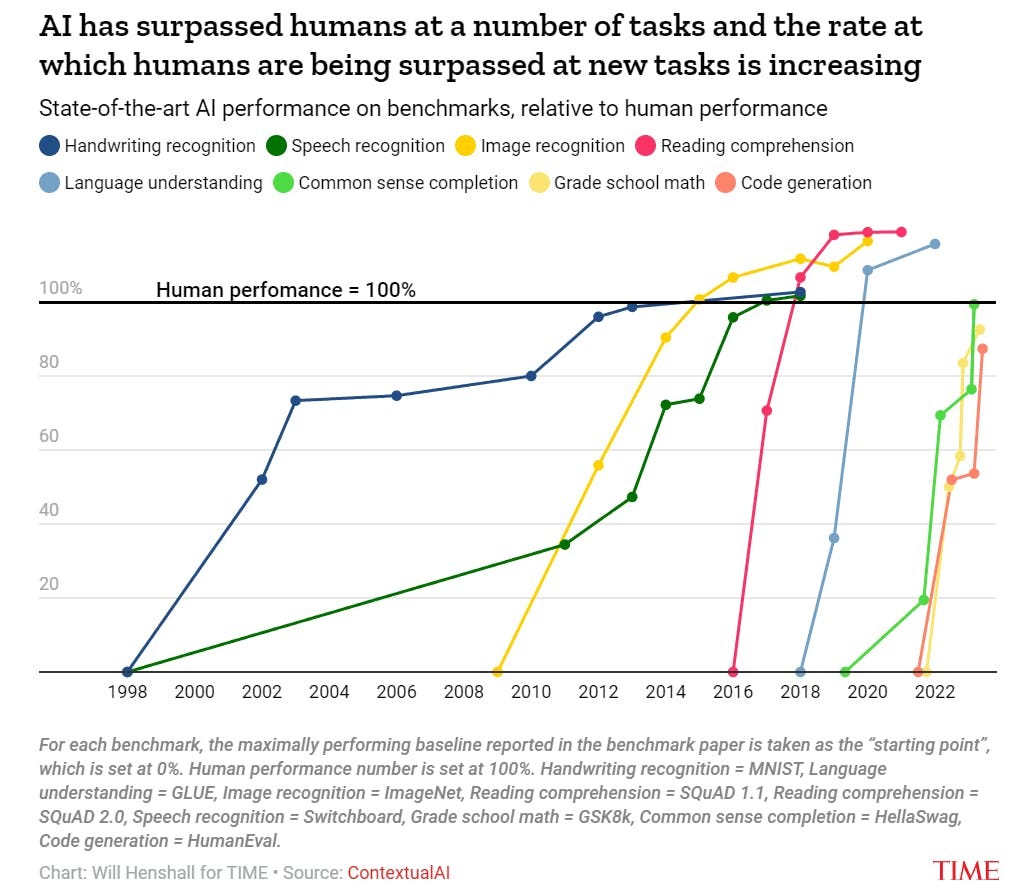

From Time (offline): why AI is unlikely to slow down.

But AI has a memory wall problem

The rise of llama.cpp surprised many. Why can an ordinary MacBook run state-of-the-art models only a bit slower than a mighty A100? The answer is that when you’re generating with a batch size of one, one token at a time, memory bandwidth is the bottleneck, and the gap between the M2’s memory bandwidth and the A100’s is not that large.
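The bandwidth argument can be checked with back-of-envelope arithmetic. Below is a minimal sketch, assuming that generating each token requires reading every model weight from memory once (it ignores the KV cache, compute time, and software overhead), so tokens per second cannot exceed memory bandwidth divided by the size of the weights. The function names are hypothetical, and the bandwidth values are approximate published peak figures supplied here as assumptions, not benchmarks.

```python
# Back-of-envelope sketch of the "memory wall" for single-batch LLM decoding.
# Assumption: each generated token streams every weight from memory once,
# so (memory bandwidth) / (bytes of weights) bounds tokens per second.
# Bandwidth numbers below are approximate spec-sheet figures (assumed).


def model_bytes(n_params: float, bits_per_param: int) -> float:
    """Bytes needed to hold the weights at a given precision."""
    return n_params * bits_per_param / 8


def max_tokens_per_second(n_params: float, bits_per_param: int,
                          bandwidth_gb_per_s: float) -> float:
    """Upper bound on decode speed when memory bandwidth is the bottleneck."""
    return bandwidth_gb_per_s * 1e9 / model_bytes(n_params, bits_per_param)


# Approximate peak memory bandwidths in GB/s (assumed, not measured).
BANDWIDTH_GB_PER_S = {
    "M2": 100,
    "M2 Max": 400,
    "A100 80GB (SXM)": 2039,
}

if __name__ == "__main__":
    n_params = 7e9  # a 7B-parameter model, e.g. the smallest LLaMA
    for bits in (16, 4):
        size_gb = model_bytes(n_params, bits) / 1e9
        print(f"7B model at {bits}-bit: {size_gb:.1f} GB of weights")
        for device, bw in BANDWIDTH_GB_PER_S.items():
            tps = max_tokens_per_second(n_params, bits, bw)
            print(f"  {device}: at most ~{tps:.0f} tokens/s")
```

Because the bound scales inversely with bits per weight, 4-bit quantization shrinks a 7B model's weights from about 14 GB to 3.5 GB, which under this simple model allows roughly 4x more tokens per second on the same hardware.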

History

Also see @omooretweets’ summary of AI in 2023.

Feb 24, 2023: Meta launches LLaMA, a relatively small, open-source AI model.

March 3, 2023: LLaMA is leaked to the public, spurring rapid innovation.

March 12, 2023: Artem Andreenko runs LLaMA on a Raspberry Pi, inspiring minification efforts.

March 13, 2023: Stanford’s Alpaca adds instruction tuning to LLaMA, enabling low-budget fine-tuning.

March 18, 2023: Georgi Gerganov’s 4-bit quantization enables LLaMA to run on a MacBook CPU.

March 19, 2023: Vicuna, a 13B model, achieves “parity” with Bard at a $300 training cost.

March 25, 2023: Nomic introduces GPT4All, an ecosystem gathering models like Vicuna at a $100 training cost.

March 28, 2023: Cerebras trains an open-source GPT-3 architecture, making the community independent of LLaMA.

March 28, 2023: LLaMA-Adapter achieves state-of-the-art (SOTA) results on multimodal ScienceQA with 1.2M learnable parameters.

April 3, 2023: Berkeley’s Koala dialogue model rivals ChatGPT in user preference at a $100 training cost.

April 15, 2023: Open Assistant releases an open-source RLHF model and dataset, making alignment more accessible.